It looks old, but it's not! All the information in this post still applies to modern CPUs from Intel and AMD.

This post was written when the i7-9700 came out. For the first time in many years, Intel decided to disable Hyper-Threading on an i7. That gives the i7-9700 8 cores and 8 threads, while the i9-9900K has 8 cores and 16 threads. This confused some people, since the older i7-8700K has 6 cores and 12 threads, which seems like it should be "better" than 8 threads.

Newer 12th-gen CPUs also have odd core/thread counts that don't seem to add up, such as 14 cores and 20 threads. We will write about that soon!

As for the 9th-gen CPUs, the 9700K has the same 95W heat dissipation spec (TDP), and its core count went up from 6 to 8 cores, a 33% increase. But Simultaneous Multithreading (SMT), which Intel calls Hyper-Threading, has been cut off. That brings the number of hardware threads down from 12 to 8, a 33% reduction as well!

OK, so what exactly are cores and threads, and why does a CPU sometimes have more threads than cores, and sometimes the same number? This is going to be a long read, so grab a coffee.

First of all, what is 1 Core?

As soon as I started writing on this topic, the first thing I had to do was open my backups and dig out the files from my school days, from when the teacher taught this, to review the concept.

The subject that covers this is called Computer Architecture. If you are interested in studying it, it is part of the Computer Science curriculum.

It brings me joy to write this post, because it's like going back to review the foundations of my knowledge. And as I get older and my English improves, rereading the same material on Wikipedia and WikiChip has given me a better understanding and a better perspective.

Let's go back to the CPU, "1 core", and what it looks like. For those who don't know, the CPU is not the big black box (which nowadays has lots of flashing lights, too) that we plug the monitor, keyboard and mouse into. Let's call that box the "case".

CPU stands for Central Processing Unit; some people call it the main processor. It sits inside your computer case. If you open the case, you will find these important parts:

- The motherboard, the base board that everything in the computer plugs into so that it can all connect. Open the case and this is the part you will see most clearly (ignore that RTX card for now!)

- RAM (main memory) that is plugged into the motherboard.

- The CPU, which also plugs into the motherboard, but we often don't see it because it is covered by a very large heat sink or a water-cooling pump.

What about our SSD, HDD, video card and RGB lights? Aren't they important too? None of them are part of the "computer" itself. In fact, from the CPU's point of view, every other device attached to it looks like memory (memory-mapped I/O). Read on for more.

Von Neumann Machine

The computers we use today were designed according to an architecture invented by John von Neumann. He wrote down his thoughts by hand, 101 pages long, during a train ride to Los Alamos in 1945. At that time we already had a "computer" (more precisely, a "calculator") in use: ENIAC. But the problem with computers at the time (especially ENIAC; I guess von Neumann was one of the people who used it) was that each one was purpose-built. We could not easily change its "program". To change it, you had to flip switches and re-plug cables.

But von Neumann was the first to present the idea of a stored-program computer in writing (Wikipedia mentions that the stored-program computer had been described before, but von Neumann was the one who designed it in detail), in a document called First Draft of a Report on the EDVAC. The stored-program computer is what made "programming languages" possible later on, because computers designed according to this architecture let us create "programs that create programs" (compilers, e.g. gcc).

By John von Neumann - https://archive.org/stream/firstdraftofrepo00vonn#page/n1/mode/2up, Public Domain

(Writing this reminds me of a TED Talk saying we should let our brains go into 'Default Mode', that is, give them some free time to process information. We're probably killing that time with 'Screen Time', right? Two of my friends are raising their kids without any 'screens' at all. I'm curious to see how these kids grow up :) )

In theory, the von Neumann architecture (also known as the von Neumann machine) looks like this.

By Kapooht - Own work, CC BY-SA 3.0

This computer of von Neumann's consists of:

- Input / Output Device - This is a device that accepts commands (Input) and displays results (Output).

- Central Processing Unit - which consists of

- Control Unit (CU): the part that controls the operation of the CPU when it receives a "command" (in the First Draft, von Neumann called this an "order"; today we use the word "instruction" to avoid ambiguity).

So you might define [plus,1,2,9] to mean: take the data stored in memory position 1, add the data in memory position 2, and store the result in memory position 9. But the CU itself cannot add numbers. That is the job of the ALU, so the CU orders the ALU to do it.

- Arithmetic and Logic Unit (ALU): performs arithmetic (addition, subtraction, multiplication, division) and logical operations (if P is true and Q is true, then P AND Q is true...). The ALU is fixed-function: it can only do the specific things it was designed for. If it was designed to add, subtract, multiply and divide, that is all it can do. If you want it to compute an exponent, it can't.

However, we can define [Exponentiation,2,1,14] to mean: take the data in memory position 2, raise it to the power of the data in memory position 1, and store the result in position 14. Then, when we design the CU logic, we make the CU order the ALU to multiply repeatedly to simulate exponentiation.

Or if you are familiar with programming, this CU is like an "Interpreter".


- Memory Unit: main memory, used to store both data and the instructions that tell the CPU what to do. This is what makes von Neumann's machine a stored-program computer; earlier computers were "fixed function" and used memory only to read and write input and output.

So we can conclude that 1 core must have 1 CU commanding 1 ALU, and the computer is this CPU connected to the Memory Unit.
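To make the CU/ALU split concrete, here is a minimal Python sketch using the made-up instruction format from above ([name, source 1, source 2, destination], where the numbers are memory positions). The function names `alu` and `control_unit` are hypothetical, and for simplicity the program is kept in a separate list rather than in memory. The ALU can only add and multiply; the CU acts as the interpreter and builds exponentiation out of repeated multiplications.

```python
def alu(op, a, b):
    """Fixed-function ALU: it can only add and multiply, nothing else."""
    if op == "add":
        return a + b
    if op == "mul":
        return a * b
    raise ValueError(f"ALU cannot do {op!r}")


def control_unit(program, memory):
    """Interpret each instruction and order the ALU to do the arithmetic.

    Hypothetical instruction format: (name, src1, src2, dst), where the
    numbers are memory positions.
    """
    for name, src1, src2, dst in program:
        if name == "plus":
            # [plus,1,2,9]: memory[9] = memory[1] + memory[2]
            memory[dst] = alu("add", memory[src1], memory[src2])
        elif name == "exponentiation":
            # [exponentiation,2,1,14]: memory[14] = memory[2] ** memory[1]
            # The ALU has no power operation, so the CU multiplies repeatedly.
            result = 1
            for _ in range(memory[src2]):
                result = alu("mul", result, memory[src1])
            memory[dst] = result
        else:
            raise ValueError(f"unknown instruction {name!r}")
    return memory


memory = [0] * 16
memory[1], memory[2] = 3, 2
control_unit([("plus", 1, 2, 9), ("exponentiation", 2, 1, 14)], memory)
print(memory[9], memory[14])  # 5 8
```

Adding a new "instruction" here only means teaching the CU a new recipe; the ALU itself never changes.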

Earlier I said that the SSD, HDD and GPU are not part of the computer at all :) The reason we can use these devices is that the CPU reads and writes a specific range of memory addresses to send commands and read/write data to them. The devices are designed so that when data appears at an address in their range, they respond and process the data or command. Each one is like another computer attached to the main computer, because the SSD, HDD and GPU actually each have their own processor (controller) inside.
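A minimal Python sketch of memory-mapped I/O, assuming a made-up machine with 16 words of RAM and a pretend display device mapped at address 0xF0 (the class name `Bus`, the address and the device are all hypothetical). The point is that the CPU side only ever does "write to address X"; whether that lands in RAM or on a device depends on the address range.

```python
class Bus:
    """Toy address space: RAM at the bottom, one device mapped at the top.

    The device and its base address (0xF0) are hypothetical, for illustration.
    """

    def __init__(self, ram_size=16, device_base=0xF0):
        self.ram = [0] * ram_size
        self.device_base = device_base  # addresses >= this belong to the device
        self.screen = []                # characters the pretend display has shown

    def write(self, addr, value):
        if addr >= self.device_base:
            # The CPU only "writes memory"; the device reacts to the write.
            self.screen.append(chr(value))
        else:
            self.ram[addr] = value  # ordinary RAM (IndexError in the unmapped gap)

    def read(self, addr):
        if addr >= self.device_base:
            return 1  # pretend status register: the device is always ready
        return self.ram[addr]


bus = Bus()
bus.write(3, 42)              # a normal memory write
for ch in "Hi":
    bus.write(0xF0, ord(ch))  # the same kind of write, but it lands on the device
print(bus.read(3), "".join(bus.screen))  # 42 Hi
```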

Now you know why some of your memory shows up as "Hardware reserved"!

To be a fully functional computer, the designer must specify the "instruction set" (Instruction Set Architecture, ISA) that the CPU can process. The most common ones are x86, AMD64 (the 64-bit version of x86), and ARM.

Before we go any further, let's summarize what we know first:

- The CPU consists of a CU and an ALU.

- The CPU operates according to "instructions" stored in memory (the MU).

- Each instruction tells the CPU what to do: the CU commands the ALU to perform operations.

- Since the CPU can also write to main memory, an instruction can change the instructions that will run after it. (A self-modifying program!!! That is why we always dreamt of AI, which is essentially a program that can adapt its own code.)
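Here is a tiny Python sketch of that last point, assuming a made-up machine where instructions and data live in the same memory list (the function `run` and its three instruction formats are hypothetical). The first instruction copies an instruction that was sitting in memory as plain data over the very next instruction, so the program changes what it does while it runs.

```python
def run(memory, max_steps=100):
    """Tiny stored-program machine: instructions and data share one memory.

    Hypothetical instruction formats:
      ("add", a, b, dst)   memory[dst] = memory[a] + memory[b]
      ("copy", src, dst)   memory[dst] = memory[src]  (works on instructions too)
      ("halt",)
    """
    pc = 0  # program counter: address of the next instruction
    for _ in range(max_steps):
        instr = memory[pc]
        if instr[0] == "halt":
            return memory
        if instr[0] == "add":
            _, a, b, dst = instr
            memory[dst] = memory[a] + memory[b]
        elif instr[0] == "copy":
            _, src, dst = instr
            memory[dst] = memory[src]
        else:
            raise ValueError(f"unknown instruction {instr!r}")
        pc += 1
    raise RuntimeError("step limit reached")


memory = [
    ("copy", 5, 1),    # 0: overwrite the instruction at address 1
    ("halt",),         # 1: original next instruction (gets replaced)
    ("halt",),         # 2
    20,                # 3: data
    22,                # 4: data
    ("add", 3, 4, 6),  # 5: an instruction stored as plain data
    0,                 # 6: the result goes here
]
run(memory)
print(memory[6])  # 42
```

Without the copy at address 0, the machine would halt at address 1 and position 6 would stay 0.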

This concept was taken further and further until it became the Core i7, Ryzen and Snapdragon. But if we want to understand what a core is and what a thread is, we need to know one more thing: the pipeline.

Pipeline

When we try to build von Neumann's idea into a real CPU, we find that the CU's work can be divided into smaller, simpler steps. Each step is a fixed-function task, i.e. it does the same thing over and over again for every instruction.

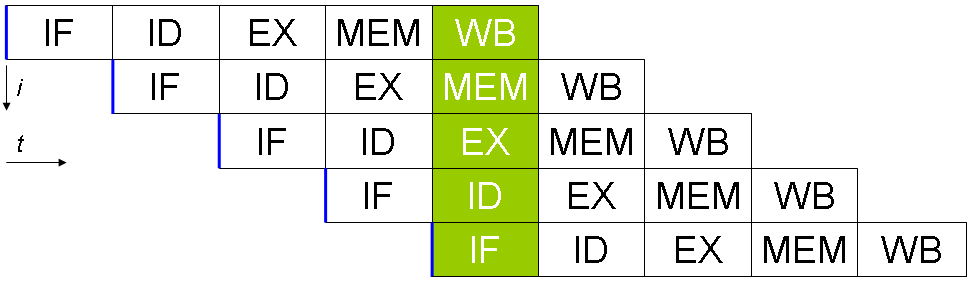

For simplicity, we will look at the MIPS architecture. Every instruction sent to the CPU can be divided into these steps:

- IF (Instruction Fetch): read (fetch) an instruction from memory. The CPU has a special storage location called the Program Counter register (PC) that holds the memory address the CPU will read the instruction from. And of course, a program can change the value of the PC register as well.

(Note that a register is not a cache; the ISA tells you how many registers like this we can use.)

- ID (Instruction Decode): after reading the instruction, the CPU has to make sense of it, i.e. work out what it must do with the piece of information it just read from memory.

- EX (Execute): once the CU understands what to do, it does it in this step, e.g. add, subtract, multiply, divide, or logical operations like AND and OR.

- MEM (Memory Access): the step where the CPU reads data from main memory (a load) or writes data to it (a store).

For the MIPS ISA in this example, the CPU cannot "add" data directly in memory. It must first load the numbers to be added (the operands) into registers; then it adds the value in one register to the value in another register and stores the result in a third register. If you watch how children add numbers, they follow similar steps :)

What about instructions that don't read or write memory? The MEM hardware sits idle during that time (this is the KEYWORD).

- WB (Write Back): the step where the CPU writes the result into a register. Instructions that produce no register result, such as a store, leave the WB hardware idle.

In fact, while the CPU executes an ADD instruction (whose data is already in registers), the MEM stage has nothing to do!

To visualize this, think of a conveyor belt in a factory: raw materials move through 5 different machines. When I think about this, I think of the game Purble Place in Windows 7. Funnily enough, its cake factory has 5 stages as well.

Using Purble Place as an example, a cake is like an instruction that passes through different machines, each doing one job, such as squeezing cream or decorating the cake.

When the cake has gone through all the steps, a complete cake comes out at the end.

If we wait until one cake is finished before starting the next one, efficiency is very low. Assuming each machine takes 1 cycle of 1 second, we produce only 1 cake every 5 seconds, i.e. less than 1 cake per cycle (this measure is Instructions Per Cycle, IPC). This level of performance is called subscalar performance: in one cycle, you finish less than 1 instruction.

The cycle is the "clock speed" of the CPU. If this cake factory were a CPU, it would run at 1 Hz, or 1 cycle per second. Real CPUs now run at 4-5 GHz, meaning 1 cycle takes just one fifth of a billionth of a second (0.2 ns); in 1 second, 5 billion cycles happen on the CPU.

So, to put all the idle machinery to work, we send cake orders in right behind each other. As long as the orders are unrelated to each other (no data dependency between them), every machine in the factory can work at the same time, producing one cake every second.

So, after a ramp-up of 5 seconds (while the first cake has not yet reached the end of the line; "ramp-up" in factory terms), we get scalar performance: 1 IPC, i.e. 1 Instruction Per Cycle (clock).
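The arithmetic behind subscalar vs. scalar performance can be checked with a small Python sketch. It assumes an ideal 5-stage pipeline with no stalls and no dependencies (the function names are hypothetical): one instruction at a time costs 5 cycles each, while pipelining pays the 5-cycle ramp-up once and then finishes one instruction per cycle.

```python
STAGES = ["IF", "ID", "EX", "MEM", "WB"]


def cycles_one_at_a_time(n_instructions, n_stages=len(STAGES)):
    # Each instruction leaves the last stage before the next one enters the first.
    return n_instructions * n_stages


def cycles_pipelined(n_instructions, n_stages=len(STAGES)):
    # The first instruction needs n_stages cycles (ramp-up); after that,
    # one instruction finishes every cycle, assuming no stalls at all.
    return n_stages + (n_instructions - 1)


def pipeline_chart(n_instructions):
    # Instruction i enters IF in cycle i and moves one stage per cycle.
    rows = []
    for i in range(n_instructions):
        row = " " * (5 * i) + "".join(stage.ljust(5) for stage in STAGES)
        rows.append(row.rstrip())
    return "\n".join(rows)


n = 100
for name, cycles in [("one at a time", cycles_one_at_a_time(n)),
                     ("pipelined", cycles_pipelined(n))]:
    print(f"{name}: {cycles} cycles, IPC = {n / cycles:.2f}")
print(pipeline_chart(3))
```

For 100 instructions this gives 500 cycles (IPC 0.20) versus 104 cycles (IPC 0.96); the pipelined IPC gets closer to 1 the longer the run.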

At university, this is the standard picture used to teach students (the cake version is prettier, right? :D)

This kind of work is called Pipelining :)

In conclusion, this design has 5 pipeline stages, the machine has 1 (hardware) thread, and this way of working, where instructions are executed one after another in program order, is called In-Order Execution.

If you're interested, you can read more about CPUs with a short pipeline like this; most of them are RISC (Reduced Instruction Set Computer). Intel/AMD x86 CPUs are called CISC (Complex Instruction Set Computer), but they are actually very advanced: internally, they are essentially RISC CPUs that emulate CISC!!!

This is one of the reasons we don't have an x86 CPU as energy-efficient as the ARM chips in mobile phones: by ISA, x86 is CISC, so its instructions are more complicated, and so is its working cycle. There are also OISC (One Instruction Set Computer) and ZISC (Zero Instruction Set Computer) designs.

And there's a lot more to this concept: pipeline stalls and branch prediction, as well as why the NetBurst microarchitecture from 10 years ago was a case study when I took Computer Architecture. It was slower than AMD and difficult to scale (partly because of pipeline stalls and the branch misprediction penalty), despite how dazzling NetBurst's technology was. If you are interested, go ahead and read about it; it is a very fun read.

Factory owners don't want anyone sitting idle.

When we work in the in-order execution style, processing instructions strictly in sequence, we find that for some instructions, some circuits (functional units in the CPU, or machinery in the factory) are not used.

In the cake game from earlier, machine number 3 squeezes cream between the two layers of cake. If the cake has only a single layer, or doesn't need cream between the layers, that machine sits idle and wastes time.

What can we do to keep those functional units (FUs) fully utilized? We must stop working strictly in order.

The principle is that instead of designing the CPU to fetch one instruction at a time and work on it to the end, it fetches many instructions at once (a block of instructions). Then, with the magic of complex algorithms in the CPU, it works out the order in which the instructions in the block should be executed to use the functional units as much as possible, while still producing the same result as if the instructions had been executed sequentially.

This method is called Out-of-Order Execution (with In-Order Retire), or OoO.

(Actually, in the Purble Place game, we also have to do OoO on the medium and hard difficulty levels!!)
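A toy Python model of the idea, assuming a single-issue core, a made-up 4-cycle load latency and no other limits (the names `Instr`, `simulate` and the instruction list are hypothetical). The in-order version may only issue the next instruction in program order; the out-of-order version may issue the oldest waiting instruction whose inputs are ready. Values are tracked per producing instruction, which effectively assumes register renaming, and the in-order retire step is left out.

```python
from dataclasses import dataclass


@dataclass
class Instr:
    text: str
    dest: str
    srcs: tuple
    latency: int  # cycles until the result is available


def producer(program, i, reg):
    """Index of the nearest older instruction that writes `reg`, or None."""
    for j in range(i - 1, -1, -1):
        if program[j].dest == reg:
            return j
    return None


def is_ready(program, i, done, cycle):
    """True if every input of instruction i has been produced by `cycle`."""
    for reg in program[i].srcs:
        p = producer(program, i, reg)
        if p is not None and (p not in done or done[p] > cycle):
            return False
    return True


def simulate(program, out_of_order):
    """Issue at most one instruction per cycle; return when the last result is ready."""
    done = {}  # instruction index -> cycle its result becomes available
    next_in_order = 0
    cycle = 0
    while len(done) < len(program):
        if out_of_order:
            candidates = [i for i in range(len(program)) if i not in done]
        else:
            candidates = [next_in_order]
        for i in candidates:  # oldest first
            if is_ready(program, i, done, cycle):
                done[i] = cycle + program[i].latency
                if not out_of_order:
                    next_in_order += 1
                break
        cycle += 1
    return max(done.values())


program = [
    Instr("LOAD r1, [r7]", "r1", ("r7",), 4),        # slow: waits on memory
    Instr("ADD r2, r1, r1", "r2", ("r1", "r1"), 1),  # depends on the load
    Instr("ADD r3, r4, r5", "r3", ("r4", "r5"), 1),  # independent
    Instr("ADD r6, r4, r4", "r6", ("r4", "r4"), 1),  # independent
]
print("in-order:", simulate(program, out_of_order=False))     # 7
print("out-of-order:", simulate(program, out_of_order=True))  # 5
```

The two independent ADDs slip into the cycles the in-order core would have spent waiting for the load, just like sending a cake without cream past the idle cream machine.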

Here is the block diagram of the Skylake CPU core (Kaby Lake and Coffee Lake still use the same architecture) from the WikiChip website.

As you can see, it's much more complicated than the IF, ID, EX, MEM, WB steps we just looked at. Compared to the von Neumann machine, the first thing to notice is that the "Execution Unit" section has 4 ALUs, which are not identical, plus AGUs used to access memory, even though in theory a CPU needs only 1 ALU.

AGU: Address Generation Unit. It calculates the memory addresses that instructions use. Nowadays, the address a program asks for also needs something "added" to it before it reaches RAM, because many programs share main memory. For example, with 16GB of RAM, a program can use all 16GB (or more) even while another program is running, and the OS makes this possible with a system called paging.

The part that must be added works like this: a program may request memory position number 5 (address 5), but the OS may divide the actual memory into several ranges (pages). Position 5 of this program is no longer the 5th position in physical memory; it is an offset from the starting point of the range given to this program.

For example, if there are 30 memory positions, the OS can divide them into 3 ranges (that is, 3 pages of 10 positions each). If the program is placed in the 3rd range, its 5th position will be position 20 + 5 = 25 in memory.
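The 30-position example above can be written as a tiny page-table lookup. This is a simplified Python sketch assuming a single-level page table stored as a dict and the example's page size of 10 (`translate` and `page_table` are hypothetical names); real systems use power-of-two page sizes such as 4 KiB, multi-level tables and hardware support for the lookup.

```python
PAGE_SIZE = 10  # positions per page, as in the 30-position example


def translate(virtual_address, page_table, page_size=PAGE_SIZE):
    """Map a program's address to a physical memory position.

    page_table[v] is the physical page (range) the OS gave to virtual page v.
    """
    virtual_page, offset = divmod(virtual_address, page_size)
    physical_page = page_table[virtual_page]
    return physical_page * page_size + offset


# The OS put this program's first page into the 3rd range (physical page 2).
page_table = {0: 2}
print(translate(5, page_table))  # 25
```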

The ultimate is Simultaneous Multithreading (SMT).

The ultimate effort to use as many functional units as possible at the same time is to let one core run multiple programs simultaneously. (For clarity: 'thread' means a software thread; THREAD means a hardware thread on the CPU.)

When we create a thread in our program, that thread is not necessarily running all the time. Many factors get in the way: memory is much slower than the CPU, so the thread has to wait for data to arrive, and threads have to communicate with each other (thread synchronization) to avoid conflicts. While "waiting", the thread is asleep.

The moment this thread is asleep, the functional units of the core (THREAD) running it are idle and free for someone else to use. This is the idea behind the Simultaneous Multithreading (SMT) technique: 1 CPU core can receive instructions from more than one thread, and the out-of-order execution machinery hands the resources freed up by a waiting thread to another thread that is ready to run.

You might be wondering: how can the CPU run multiple programs at the same time? After all, every instruction writes data to registers, and the two programs (processes) know nothing about each other.

Plus, from the program's point of view, it is the only program running on the CPU (when we write a program, we don't have to worry about how many other programs are running at the same time). That means the program can use all the registers specified by the ISA without sharing them with anyone.

So how does a program avoid overwriting, or reading, another program's registers?

This is where we decide how many THREADs the core will have. If we want a core design to have 2 THREADs, we design the core (the block diagram shown earlier is 1 core) with space to store 2 complete sets of the ISA's registers. That makes it look as if the two programs each have their own core, even though they actually share the same ALUs and AGUs.

Intel® hyper threading technology from Amirali Sharifian
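Here is a toy Python model of that idea. It assumes a core that issues one instruction per cycle, taking turns between THREADs in round-robin order, and a made-up `miss` instruction that puts a THREAD to sleep for a few cycles, as if waiting on memory (`run_smt` and all the instruction names are hypothetical). Each THREAD gets its own register set and program counter; the adder is shared.

```python
def run_smt(programs, n_regs=4):
    """Run one program per hardware THREAD on a single-issue core.

    Hypothetical ops: ("li", d, imm), ("add", d, s1, s2), ("miss", n).
    Returns (cycles used, per-THREAD register sets).
    """
    n = len(programs)
    regs = [[0] * n_regs for _ in range(n)]  # duplicated architectural registers
    pc = [0] * n                             # one program counter per THREAD
    ready_at = [0] * n                       # cycle each THREAD may issue again
    last = n - 1                             # round-robin pointer
    last_issue = -1
    cycle = 0
    while any(pc[t] < len(programs[t]) for t in range(n)):
        for k in range(1, n + 1):
            t = (last + k) % n
            if pc[t] < len(programs[t]) and ready_at[t] <= cycle:
                op = programs[t][pc[t]]
                if op[0] == "li":
                    regs[t][op[1]] = op[2]
                elif op[0] == "add":  # the shared ALU
                    regs[t][op[1]] = regs[t][op[2]] + regs[t][op[3]]
                elif op[0] == "miss":  # THREAD sleeps while "memory" responds
                    ready_at[t] = cycle + op[1]
                else:
                    raise ValueError(f"unknown op {op!r}")
                pc[t] += 1
                last, last_issue = t, cycle
                break
        cycle += 1  # if no THREAD was ready, this cycle is simply wasted
    return last_issue + 1, regs


prog_a = [("li", 0, 1), ("miss", 3), ("li", 1, 2), ("add", 0, 0, 1)]
prog_b = [("li", 0, 10), ("miss", 3), ("li", 1, 20), ("add", 0, 0, 1)]

alone, _ = run_smt([prog_a])                 # one THREAD: 6 cycles
together, regs = run_smt([prog_a, prog_b])   # two THREADs share the core
print(alone * 2, together)     # 12 9  (A then B vs. A and B interleaved)
print(regs[0][0], regs[1][0])  # 3 30  (same register names, separate sets)
```

Both programs use registers 0 and 1, yet neither clobbers the other, and the cycles one THREAD spends asleep are filled with work from the other.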

And a single core doesn't have to have only 2 THREADs; it can have more. The highest ever designed goes up to 128 THREADs per core, but the way those designs distribute instructions is different from the SMT I talk about in this post.

Also note that a program will not always stay on the same THREAD, or even the same CPU core, because it is the OS's job to find the best place to run a program and its threads. A CPU with 12 THREADs means the OS can run 12 threads at the same time, and those 12 threads may come from different programs or all from the same program.

In other words, the OS must also know that this CPU has 2 THREADs per core (it must be Hyper-Threading aware), so that it can place threads from the same program on the same core, because each core has its own separate copy of data from memory (its L1 and L2 caches). If the OS spreads threads from the same program across multiple cores, or moves a thread between cores during its lifetime, the CPU has to reload that thread's data every time it changes cores (which is why there is now an L3 cache, shared by all cores). Moreover, jumping between cores creates another problem: cache coherency.
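On Linux you can look at the same topology a Hyper-Threading-aware OS uses: for every logical CPU, the kernel exposes a `thread_siblings_list` file in sysfs listing the logical CPUs that share its physical core. The Python sketch below assumes a Linux system with sysfs mounted at `/sys`; on other systems the glob simply finds nothing. The helper names are hypothetical.

```python
import glob
import os


def parse_cpu_list(text):
    """Parse a Linux cpulist such as "0,4" or "0-1,8-9" into a sorted list."""
    cpus = set()
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            first, last = part.split("-")
            cpus.update(range(int(first), int(last) + 1))
        else:
            cpus.add(int(part))
    return sorted(cpus)


def smt_sibling_groups():
    """Groups of logical CPUs that share one physical core (Linux sysfs only)."""
    groups = set()
    pattern = "/sys/devices/system/cpu/cpu[0-9]*/topology/thread_siblings_list"
    for path in glob.glob(pattern):
        try:
            with open(path) as f:
                groups.add(tuple(parse_cpu_list(f.read())))
        except OSError:
            pass  # CPU went offline or the file is not readable; skip it
    return sorted(groups)


print("logical processors:", os.cpu_count())
for group in smt_sibling_groups():
    print("one physical core ->", group)
```

On a 6-core/12-THREAD chip you would expect six groups of two logical CPUs each; on a CPU without SMT, every group has just one.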

Let's summarize for a better understanding. :)

- A core is a CPU with a full set of built-in functional units; it can process instructions on its own.

- To make full use of the functional units, our first attempt is pipelining: dividing the CPU into smaller functional units so that it works on multiple instructions at the same time.

- Out-of-order execution extends pipelining by letting the CU in the CPU decide which instruction should be done first, instead of executing instructions strictly in the order they were given.

- In theory, a CPU with 1 core has 1 THREAD, since it can process one thread of a program at a time.

- Simultaneous multithreading is a technique for maximizing functional-unit utilization that extends out-of-order execution by multiplying the ISA's register set by the number of THREADs the core needs, so that more than 1 thread can execute at the same time.

- A THREAD doesn't physically exist as a separate unit in the core; it's an abstraction. A CPU with 2 THREADs per core is said to have 2 logical processors in 1 physical processor.

So how much does adding just one more set of registers improve efficiency?

Because CPUs run so fast today (some Intel CPUs, such as the NetBurst-based Pentium 4, even ran their simple integer ALUs at twice the core clock speed), even a little bit of free time can improve efficiency a lot. But by how much?

Of course, no one can give a definitive answer to any performance question. There are important variables, such as the programs used to test performance. The hardest part is that the ALUs are not equally capable, so calculating the gain without actually testing it is almost impossible: the CPU's job scheduler tries to fill every functional unit with whatever jobs are available at that moment, so the result varies with the jobs sent to it.

As for Intel's SMT technology (HyperThreading), Intel claims it's 15-30% more efficient than a CPU of the same number of cores without Hyper Threading.

Let's first compare results from the PassMark website. I'm not sure how big the error is, since K CPUs can be overclocked and skew the results, but let's just use them as a reference point.

8600K vs 8700K: 24% difference

(With all cores in use, the 8600K turbos at 4.1GHz and the 8700K at 4.3GHz. Adjusted for the clock speed difference, the performance gain would be about 20%.)

7600K vs 7700K: 31% difference

(With all 4 cores in use, the 7600K turbos at 4.0GHz and the 7700K at 4.4GHz. Adjusted for the clock speed difference, the performance gain would be about 20%.)

6600K vs 6700K: 37% difference

(By default, with all 4 cores in use, the 6600K turbos at 3.6GHz and the 6700K at 4.0GHz. Adjusted for the clock speed difference, the performance gain would be about 27%.)
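The clock adjustment in these notes can be made explicit. A minimal Python sketch, assuming (only for the sake of the estimate) that the PassMark score scales 1:1 with clock speed; `clock_adjusted_gain` is a hypothetical helper. Dividing the score ratio by the clock ratio, instead of subtracting the percentage difference, gives somewhat lower figures than the rough numbers above: about 18%, 19% and 23%.

```python
def clock_adjusted_gain(score_gain, clock_low, clock_high):
    """Divide the score ratio by the clock ratio (assumes score scales 1:1 with GHz)."""
    return (1 + score_gain) / (clock_high / clock_low) - 1


comparisons = [
    ("8600K vs 8700K", 0.24, 4.1, 4.3),
    ("7600K vs 7700K", 0.31, 4.0, 4.4),
    ("6600K vs 6700K", 0.37, 3.6, 4.0),
]
for name, gain, low, high in comparisons:
    print(f"{name}: {clock_adjusted_gain(gain, low, high):.1%}")
# 8600K vs 8700K: 18.2%
# 7600K vs 7700K: 19.1%
# 6600K vs 6700K: 23.3%
```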

But more interesting is the comparison between the i3-8350K, which has 4 cores/4 threads, and the i5-8600K, which has 6 cores/6 threads: with 50% more cores, performance increased by about 38%.

8350K vs 8600K: 38% difference

(By default, with all 6 cores in use, the 8600K turbos at 4.1GHz, 2.5% higher than the i3.)

It is worth noting that the i7-9700K increases the core count by 33%, from 6 to 8 cores, but does not enable HT, leaving it with 33% fewer threads. Looked at the other way, the 6-core i7-8700K might be as powerful as the i7-9700K thanks to HT, because HT should give the 8700K around 30% more performance.

The PassMark results look like this:

8700K vs 9700K: 8.6% difference

(By default, with all cores in use, the 8700K turbos at 4.3GHz and the 9700K at 4.7GHz, about 9% higher.)

But remember that another factor affecting performance is the speed (GHz) of the cores themselves. The 9700K has a clock speed advantage of about 9%, which on its own roughly matches the 8.6% difference.

So, is it better to have more cores or more threads?

I took data from PassMark and compared it to the G4520, a 2-core CPU running at 3.6GHz, to see how much performance each increase in cores/threads adds, regardless of the speed increase.

The result is probably close to what people expect: 8 cores should be 4 times 2 cores, and 6 cores should be 3 times 2 cores. I'm sure Intel thought about this before setting the speed and price of each CPU, as the graph shows.

And if we assume that clock speed affects the score 1:1 (which is not true; this is just for fun, since chips from different generations can't really be compared anyway), we get results quite close to the theoretical expectation as well. Adding threads (software threads, as we agreed) does not give a 1:1 increase, because more time is wasted on synchronization, just as two graphics cards in SLI didn't always double the speed.

From the two graphs, we can conclude that

- The number of cores affects performance the most, almost in proportion to the number of cores added. But if we also remove the effect of the newer chips' higher speed (again, a rough approximation), the rate of increase shrinks: for example, 2C->4C increases 100%, but 4C->6C only increases 34%, and 6C->8C only 20%.

- SMT (Hyper-Threading) is about 15-20% effective, as Intel says. Even with its lower boost, SMT is probably a better deal than adding cores, because it is more economical and produces less heat: it just adds one more set of registers. Intel claims it takes up only 5% more space on the chip, yet gives 15-20% more efficiency.
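To see how far the core-scaling numbers fall below perfect scaling, each step can be compared with its ideal gain (new core count / old core count - 1). A small Python sketch using the clock-adjusted gains quoted in the list above; the "efficiency" column is simply observed gain divided by ideal gain, a metric introduced here only for illustration.

```python
def ideal_gain(cores_before, cores_after):
    """Gain you would get if performance scaled perfectly with core count."""
    return cores_after / cores_before - 1


# Clock-adjusted gains quoted in the list above.
observed = {(2, 4): 1.00, (4, 6): 0.34, (6, 8): 0.20}

for (before, after), gain in observed.items():
    ideal = ideal_gain(before, after)
    print(f"{before}C->{after}C: observed +{gain:.0%}, "
          f"ideal +{ideal:.0%}, efficiency {gain / ideal:.0%}")
# 2C->4C: observed +100%, ideal +100%, efficiency 100%
# 4C->6C: observed +34%, ideal +50%, efficiency 68%
# 6C->8C: observed +20%, ideal +33%, efficiency 60%
```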

So, to answer the question "are more cores or more THREADs better?": Intel is clever enough to release CPUs that are ACTUALLY faster than the previous generation anyway. In this case, the i7-9700K is a little faster even though it has fewer THREADs, or, I dare say, comparable to the i7-8700K, which has more THREADs.