What is SSD?

SSD is short for Solid-State Drive. Literally, it means the drive is SOLID (no moving parts).

The SSD was invented about 40 years ago by Storage Technology Corporation, a company founded by a team of engineers who spun off from IBM. It is now a subsidiary of Oracle under the name Oracle StorageTek, and these days it sells tape drives.

The first SSD ever made had a VERY HIGH SPEED OF... 1.5MB/s!!!

But in fact, the world's first SSD from 40 years ago is still almost 2 times faster than a current HDD~

What sets SSDs apart from other storage methods is that, in most cases, storing data in computers has involved disks, tapes, and magnets - which always require a mechanism to move something.

(That is, it's not Solid, it's just Hard.)

But before we can understand why SSDs were invented, we need to understand the basic storage principles.

How to store data on your computer...it's not just files.

"Storage" for a computer means copying data from memory (RAM), which is the computer's main memory (Primary Storage), into Secondary Storage, where it will not be lost.

The way we usually think of it is to "Save" data (from being lost!) into a File. When we want to continue working with this data, we read it from the file back into memory.

We're probably used to this: saving means having a file that we can name - which is a Logical view of data. But in this post we will go deeper than that: how that file is stored on the disk Physically - or how your bits and bytes are kept from being lost on the disk.

The first technology used to store data for computers was tape. Just in case you were born after the 1980s - this is a picture of tapes - with gigantic storage of 0.1GB and 0.4GB!!! (120MB and 400MB uncompressed)

By Photograph: Journey234 - Own work , Public Domain, Link

Needless to say, 20 years ago 400MB was a huge amount. For a tape to work, there must be something to read it, known as the Head, which sits inside the tape reader.

The head translates the magnetic patterns laid down on the tape into digital data (0101...) to be sent to the computer. Then there is the motor that spins the tape so that it runs past the head of the reader. While the tape is moving past the reading head, the information keeps flowing (it's the origin of the word Stream in computer programming languages!). For writing, the opposite is true - the head creates a magnetic field on the tape based on the data passed to it by the computer.

If you have used a Walkman or listened to music on tapes before, you will understand that there is no "Skip". If you want to listen to the next song, you have to wind the tape forward to it. That is, we can only read data from the tape in order (Sequential Access) - either forward or backward, from beginning to end.

Tape is not suitable for storing data that requires Jumps (Random Access). If you read some information and then realize that the file you need is at the end of the tape, you might have to wind the tape several hundred meters, like the tape below, which is 182 meters long! The time we have to wait for the tape to be wound to the part we want to read is called Seek Time.

By Alecv - Own work , CC BY-SA 3.0 , Link

So how do we jump to the point we need to read faster (that is, reduce Seek Time)? We cut the tape into several sections and line them up side by side.

This layout is called a Track: one line of tape carries several narrower "tapes" side by side, and we increase the number of Read/Write Heads to match.

The seek time is now shorter, but we still have not solved the worst case scenario - it still takes a long time to jump from the very beginning of the tape to the very end!

So how do you make the distance even shorter~? Stitch the front and back of the tape together and it becomes a Disc - It's like a tape that never ends!

This Disc

By Heron2/MistWiz - modified version of Disk-structure.svg by MistWiz, Public Domain, Link

Once it's a Disk, storing data gets a little more fun. It is usually addressed by CHS, or cylinder-head-sector, like this.

- Sector is the smaller slice of the "tape", labeled B in the figure.

- Cluster is a group of sectors, labeled D in the picture. Remember this one.

- A series of sectors in the same circle on the disk is called a Track, which is A in the figure.

- And since we can stack disks on top of each other, the same track at the same position on every disk is called a Cylinder, which is C in the figure.

- Finally, there can be more than one head, one for each disc in the stack.

(Plus: When to use Disc, when to use Disk )

That is, we solved all the tape problems at once: the "tape" comes back to its starting point by itself, and the time needed to jump to the data we want is reduced. It also increases read speed, because we can stack disks on top of each other and use multiple heads to read data in the same cylinder at the same time. Surely this is the peak of data storage for humankind!?

By No machine-readable author provided. Ed g2s assumed (based on copyright claims). - No machine-readable source provided. Own work assumed (based on copyright claims)., CC BY-SA 3.0 , Link

Why is Hard Disk (HDD) so slow?

When Windows/Linux/macOS reads data, it has to translate the position of the data it wants, expressed as a Cluster (this word again), into CHS, that is, where the cluster is located Physically on the Disc. The controller (a small CPU) on the HDD then interprets that information and moves the read head of the Drive (which makes the clicking sound) to position it over the required CHS position.
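
A minimal sketch of the kind of translation described above. The geometry (16 heads, 63 sectors per track) and the 8 sectors per cluster are hypothetical numbers picked only for illustration, and the function names are made up for this example. Modern drives expose linear block addresses and hide their real geometry, so treat this as the idea rather than what any particular OS does.

```python
# Hypothetical drive geometry, chosen only for illustration.
HEADS_PER_CYLINDER = 16
SECTORS_PER_TRACK = 63


def lba_to_chs(lba):
    """Convert a linear sector number into (cylinder, head, sector)."""
    cylinder = lba // (HEADS_PER_CYLINDER * SECTORS_PER_TRACK)
    head = (lba // SECTORS_PER_TRACK) % HEADS_PER_CYLINDER
    sector = lba % SECTORS_PER_TRACK + 1  # CHS sectors are numbered from 1
    return cylinder, head, sector


def chs_to_lba(cylinder, head, sector):
    """Inverse of lba_to_chs."""
    return (cylinder * HEADS_PER_CYLINDER + head) * SECTORS_PER_TRACK + (sector - 1)


def cluster_to_chs(cluster, sectors_per_cluster=8):
    """Locate the first sector of a cluster (assumes clusters start at sector 0)."""
    return lba_to_chs(cluster * sectors_per_cluster)


print(cluster_to_chs(1000))  # (7, 14, 63)
```

With 512-byte sectors, 8 sectors per cluster gives the 4096-byte cluster that shows up in the next paragraph.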

The reason the OS reads data a cluster at a time is that in a file system such as FAT32 or NTFS, the smallest unit of storage (Allocation Unit Size) is a cluster. If you try creating a small file, you will see that even though the file size is 818 bytes, it uses 4096 bytes on the Disk. As for why it has to be 4096, you could try reading further.

But let's see: in order to read 1 cluster of data from an HDD like this, what does it have to "wait" for?
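
The 818-byte example can be worked out as a small sketch. It assumes a simple file system that always rounds a file up to whole clusters and ignores any special handling a real file system may apply to tiny files; `size_on_disk` is a name made up for this example.

```python
def size_on_disk(file_size, cluster_size=4096):
    """Round a file size up to a whole number of clusters."""
    clusters = -(-file_size // cluster_size)  # ceiling division
    return clusters * cluster_size


used = size_on_disk(818)
print(used)        # 4096
print(used - 818)  # 3278 bytes of the cluster are left unused
```

A file of 4097 bytes would need two clusters, so it would occupy 8192 bytes.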

- Seek Time - The time it takes the HDD to move the head to the CHS position it wants to read. With current technology it takes about 25/1000 of a second (0.025 seconds) for a full stroke, i.e. an inside-to-outside swing. Of course, on average, the head only has to move about half of that.

- Rotational Delay - The time it takes for the desired sector to spin around and meet the reading head. It is the reason we look for an HDD with a higher rotation speed, such as 7200rpm or 10000rpm (rpm = revolutions per minute). Note that the Disc inside an HDD is always spinning, because it takes more time to spin up the Disc - so we just leave it spinning.

The faster the disc rotates, the less time it takes for the desired CHS position to arrive below the reading head. Also, if we are reading Sectors in succession, it will read faster because of the spinning speed.

For a 7200rpm Drive, the sector we want takes at most 60 / 7200 = 0.0083 seconds (one full revolution) to meet the reading head. Because the HDD is CAV (Constant Angular Velocity), the rotation speed is always the same no matter where the track is.

- Transfer Delay - The time it takes for the data to be translated and sent to the computer. Most of the time it is almost nonexistent, since it happens at the speed of the Controller in the HDD, which is in the range of 100-200MHz.

Adding it all up, you can see the time we could spend waiting is 0.025 + 0.0083 = 0.0333 seconds, or about 33ms. Well, that's pretty fast...

The problem is, your 4.0GHz CPU takes only 0.00000000025 seconds per clock cycle!!! As of now, if you ping a server in Nepal, you will get a response time of around 3-5ms. In other words, the time it takes for data to get to the server and back is about 10 times shorter than one worst-case HDD access!!!
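
The arithmetic can be checked with a short sketch that uses only the figures quoted in this section (0.025 s full-stroke seek, 7200rpm, a 4.0GHz CPU). The "average" line assumes half a stroke plus half a revolution, which is a common rule of thumb rather than a measured value.

```python
FULL_STROKE_SEEK_S = 0.025  # full-stroke seek time quoted above
RPM = 7200
CPU_HZ = 4.0e9

rotation_s = 60 / RPM                             # one full revolution
worst_case_s = FULL_STROKE_SEEK_S + rotation_s    # full stroke + full turn
average_s = (FULL_STROKE_SEEK_S + rotation_s) / 2 # half stroke + half turn
cycles_waiting = worst_case_s * CPU_HZ            # CPU cycles spent waiting

print(f"worst case: {worst_case_s * 1000:.1f} ms")
print(f"average:    {average_s * 1000:.1f} ms")
print(f"CPU cycles per worst-case access: {cycles_waiting:,.0f}")
```

The worst case comes to about 33 ms, which is more than a hundred million clock cycles of a 4.0GHz CPU for a single access.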

A little more:

This is the Worst Case Scenario of Partitioning.

Image from this thread .

You are probably wondering: the outermost track is longer, so shouldn't it be able to store more Sectors than the innermost Track? Then why are the tracks in the sample picture all divided equally? On real drives they are not: the method called Zone Bit Recording (ZBR) puts more sectors on the outer tracks, because a track near the center is shorter than the tracks outside. And since the hard disk is CAV, the outer tracks pass more data under the head on every revolution.

This ZBR thing is also why we shouldn't partition the HDD - because if we split a 1TB HDD in half, let's say C: 500GB for Windows and D: 500GB for games, the head will always have to move about half the distance to get the Windows stuff and then move about half again to read the game stuff in D. Even worse, the games in the D drive are physically stored on the second half, in the inner tracks - where the read speed is slower!
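
Here is a rough sketch of why the inner tracks read more slowly on a CAV drive. The sectors-per-track counts (2000 outer, 1000 inner) are hypothetical and only meant to show the proportion. The 512-byte sector size and the function name are likewise assumptions for this example.

```python
SECTOR_BYTES = 512
RPM = 7200


def track_throughput_mb_s(sectors_per_track, rpm=RPM):
    """Sequential read rate of one track: data per revolution x revolutions per second."""
    revolutions_per_second = rpm / 60
    return sectors_per_track * SECTOR_BYTES * revolutions_per_second / 1_000_000


outer = track_throughput_mb_s(2000)  # hypothetical outer-zone track
inner = track_throughput_mb_s(1000)  # hypothetical inner-zone track
print(f"outer: {outer:.2f} MB/s, inner: {inner:.2f} MB/s")
```

The disc spins at the same speed either way, so a track holding half as many sectors delivers half the data per second.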

So can't we make the hard disk faster anymore?

From here, you can see that what we can do to make any Drive (in the past, Tape and Disk) run faster is to find ways to reduce Seek Time and all the other delays. But the real problem is that we still have to rely on a real-world mechanism. No matter how hard we try, it is difficult to make it run at a speed similar to an electric circuit (remember, electrical signals travel at close to the speed of light!)

The correct solution is to make a Drive that stores data without using any mechanical parts. That's why people have been trying to make SSDs for the past 40 years.

But why haven't we all been using SSDs for the past 40 years? Because they were expensive! In other words, not enough people were willing to pay for the technology for it to go mainstream (lack of Economy of Scale).

Before SSD prices came down to an affordable level (about 10 years ago, I bought a laptop with an 80GB HDD for Rs. 30,000 ~ now we can get a 500GB SSD for less than Rs. 10,000), we tried to hide the seek time and all of the delays with Cache.

First Attempt: Cache

Now that we know that HDDs are very slow (and they have been the slowest device in computers for quite some time), all we can do is avoid using them as much as possible.

But first, you need to know that Cache and Buffer are different. What most HDDs advertise as 'Cache' (e.g. 128MB Cache) is really a Buffer: RAM on the HDD that holds data while it waits to be written.

As discussed in the previous section, the HDD can take up to about 33ms waiting for the sector to be under its head. Without a buffer, your computer has to wait for this operation to complete, which means it hangs for 0.033 seconds. What if we write a lot of data and it takes longer, like 20 seconds? Most people would simply have switched the computer off by then.

Therefore, when we write data, it is held in the buffer first, so that the CPU can go back to doing other things while the HDD does its thing. This is why we were told not to switch off the computer without shutting down: there may be data stuck in the buffer that has not been written to the actual disk, and it will disappear when the power is cut. (RAM needs power all the time)

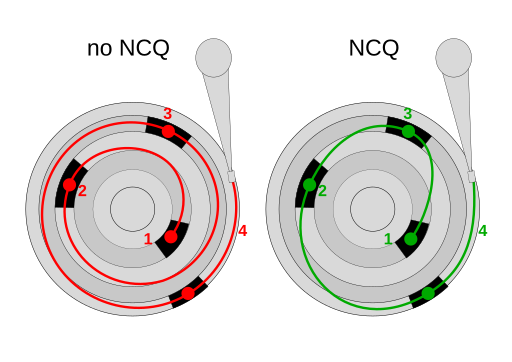

Smarter HDDs also do NCQ (Native Command Queuing): the HDD waits a bit for requests to queue up in the buffer, then finds the order with the shortest head movement to finish the reads/writes that were requested.

Cache performs a completely different function.

Cache stores information that we have just used (mostly in RAM). If we use the same data over and over again, we don't have to read it from Disk; we just take it out of the Cache.

Of course, if the HDD is smart enough, it can also take data that is still in the Buffer and send it back too - as far as I know, Seagate's HDDs do this. You will see that Seagate HDDs have 128MB of Cache.

So Cache increases speed...whenever we read the same data over and over again. Of course, the larger the cache the better, because there is a higher chance of a Cache Hit.

The most effective caching would require predicting the future: read the information that is about to be used into the Cache in advance, and when the cache is full, discard the information that will not be used again. Of course, such prediction is almost impossible (but we can partially "guess"). If interested, you can read about: LRU, LFU, MRU
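
To get a feel for why reordering helps, here is a small sketch that compares serving requests in arrival order with a greedy "nearest track first" order. This is only an illustration of the idea: the greedy strategy, the track numbers, and the function names are all assumptions, and real drive firmware also has to account for where the disc is in its rotation.

```python
def head_travel(start, order):
    """Total number of tracks the head crosses when serving requests in this order."""
    travel, position = 0, start
    for track in order:
        travel += abs(track - position)
        position = track
    return travel


def nearest_first(start, pending):
    """Greedy reordering: always serve the pending request closest to the head."""
    remaining = list(pending)
    position, order = start, []
    while remaining:
        nearest = min(remaining, key=lambda track: abs(track - position))
        remaining.remove(nearest)
        order.append(nearest)
        position = nearest
    return order


queue = [98, 183, 37, 122, 14, 124, 65, 67]  # hypothetical requested tracks
start = 53                                   # hypothetical current head position

print(head_travel(start, queue))                        # 640 in arrival order
print(head_travel(start, nearest_first(start, queue)))  # 236 after reordering
```

In this example the head travels roughly a third of the distance once the queue is reordered.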
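
As one example of "guessing", here is a minimal sketch of an LRU (Least Recently Used) cache: when it is full, it throws away whatever has gone unused the longest, on the assumption that recently used data will be used again soon. The class, the `slow_disk_read` stand-in, and the block numbers are made up for illustration and are not how any particular OS or drive implements its cache.

```python
from collections import OrderedDict


class LRUCache:
    """Keeps the most recently used blocks; evicts the least recently used one."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.blocks = OrderedDict()  # oldest entry first, newest last
        self.hits = 0
        self.misses = 0

    def read(self, block_id, read_from_disk):
        if block_id in self.blocks:
            self.hits += 1
            self.blocks.move_to_end(block_id)  # mark as most recently used
            return self.blocks[block_id]
        self.misses += 1
        data = read_from_disk(block_id)        # the slow path
        self.blocks[block_id] = data
        if len(self.blocks) > self.capacity:
            self.blocks.popitem(last=False)    # drop the least recently used
        return data


def slow_disk_read(block_id):
    """Stand-in for a real disk read."""
    return f"data-{block_id}"


cache = LRUCache(capacity=2)
for block in [1, 2, 1, 3, 1, 2]:
    cache.read(block, slow_disk_read)

print(cache.hits, cache.misses)  # 2 4
```

With room for only 2 blocks, 2 of the 6 reads are served from the cache. A larger capacity would turn more of the misses into hits.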

Windows itself also builds its own Disk Cache, so DO NOT BUY a Disk Cache program, because it will just overlap with what Windows already does.

There was also a time when Windows invited everyone to insert an SD Card into the Card Reader slot to use ReadyBoost.

Another Attempt: ReadyBoost

Most of the time we build the Cache in RAM, because if we used another HDD to cache an HDD, what would be the benefit?

ReadyBoost's idea is to use an SD Card or USB Thumbdrive as extra cache. That's because the Flash Memory inside SD Cards and USB Thumbdrives/Flashdrives was starting to get cheap at that time, and sizes were starting to reach the GB range. Plus almost everyone had a digital camera (also at THAT time), so anyone who owned a laptop almost certainly had at least 1 SD Card lying around unused.

Windows also has a feature called SuperFetch, which loads the data it thinks we will use often, based on past usage, and puts it in this ReadyBoost Cache as well.

The good thing about Flash Memory is that it's already "Solid State" - it has no moving parts and no mechanics, so it's very good at reading random data because its "Seek Time" is lower than an HDD's, making it suitable as a cache to help the HDD.

Tests in those days found that it reduced application load time by about 15%, especially on machines with less RAM. But it's not as good as having more RAM, because if more RAM is available, the free RAM will be used as a cache that is faster than Flash Memory anyway.

(Image from Anandtech : Windows Vista Performance Guide)

Then came the Persistent SSD Cache.

After Flash Memory became mainstream and we all bought (and lost) USB Drives like crazy, the price of Flash Memory fell because more and more people were buying it.

But it was not yet cheap enough to make a 500GB SSD (there were people who made drives that take many SD cards and turn them into an SSD too), so SSDs were still expensive. Manufacturers could only make small ones, such as 16GB, 32GB or 64GB, and these still cost about as much as the laptop itself - so the era of the Persistent SSD Cache came first.

If you still remember, there was Intel Smart Response Technology (SRT), which now lives on under the Optane name. The principle remains the same: take a smaller, faster SSD and use it to speed up an HDD. Go see Linus in his "golden head" days explain:

And if you are a real fan of LEVEL51, you will remember that we used to have an option to enable SRT as well. That is, we could set aside 15-60GB of space on the SSD as cache (the same SSD was also used to install Windows). It has since been removed, because the current SRT can only be used with Optane drives.

(Additional: Optane (3D XPoint) is another type of non-volatile memory that can "seek" faster than current NAND flash memory.)

One thing to note: RAID 0 does not really increase performance that much. As you can see, even compared with RAID 0, the (cached) SSD setup is still almost 50 times faster!!!

| WD Black 7200rpm 500GB | Seagate 1TB | Seagate 2TB RAID 0 |

These days, if you want to set up an SSD Cache yourself, you can use an add-on program like PrimoCache to do it.

Final Attempt: Solid State Hybrid Drive (SSHD, FireCuda)

The Hard Disk manufacturers also do SSD Cache themselves.

What they did was put an SSD inside the HDD and run the Persistent SSD Cache at the controller level of the HDD. This is probably the best approach, because the manufacturer should know its own HDD best and should know what to cache and what not to.

Seagate is probably the only one currently selling an HDD like this, under the name FireCuda. Previously, there was one from WD with a 128GB SSD inside - but that was actually a separate SSD embedded within the HDD.

(Photo from Storage Review )

According to Storage Review's tests, having an SSD cache on an HDD more than doubles the speed of reading random, repetitive data (if it's cached, it is read from the SSD instead of from the HDD).

But now we don't have to fight against HDD slowness anymore, because SSDs are at a price that everyone can afford.

How does SSD make us better?

You can see that all our efforts against the HDD were attempts to solve the problem of Seek Time. So how does Seek Time affect our computer experience?

- Get into Windows faster and open programs faster. A program's files are mostly small files, and an HDD is slow to read them. Using an SSD will make your computer feel faster.

- Wake up from Sleep quickly. Notice that if the machine has an HDD, there is a motor spinning sound for a while before the laptop turns back on.

- Less RAM is not too bad, because Windows can move stuff to and from RAM faster, so having less RAM will not feel that much slower.

- Fragmentation will not affect us. When we write small, frequent pieces of data, most file systems in principle look for the largest free gap in which to put each piece. The most brutal thing you can do to your computer is downloading multiple BitTorrent files at the same time - because the contents of the files will be scattered all over the HDD.

You can see that the Miglog.xml file, which judging by its name was written in small bits at a time, is just 68MB but is already spread across 1063 pieces.

(If you are still using an HDD, you can download Defraggler from Filehippo, the owner of this picture.)

So, What are some of the Things to Look for when Getting SSD?

When choosing an SSD, we recommend that you look at two main things:

- The brand. I've taken a risk with some weird brands on eBay before, such as KingSpec or Kingdian, which were very cheap at the time.

I could install Windows, but after a while of use my laptop would freeze or slow to a crawl. That was the hard way for me to learn that Flash Memory chips come in different qualities too! It might be an SSD, but with low quality Flash Memory it's slow anyway. As you can see from this GPD model, it has a high sequential read speed (SEQ) but very slow 4K speed. Our Crucial SSDs are about 10 times faster!

- Random Read/Write speed. We buy an SSD because we want the machine to feel faster, so what we need to look at is the Random Read/Write speed from reviews, not the advertised speed of 1,000-2,000-3,000MB/s on the box, which is the theoretical maximum that rarely happens in real life.

On our Laptop Configuration page, we have this information to help you choose the best option for your usage. As you can see, Samsung drives have a high "advertised" speed, but their Random Read/Write speed is almost the same as the cheaper Crucial/Patriot drives.

There are some other things to note too:

- SLC, MLC, TLC, QLC. This is the Flash Memory (NAND) chip technology: each cell stores 1, 2, 3 or 4 bits. The more bits a cell can store, the cheaper the drive will be, but the downside is that it will be slower.

- Write Endurance is how much data can be written to the SSD before it goes bad. The unit is usually TBW = Terabytes Written (1 Terabyte = 1000GB, not 1024GB), meaning that a 100TBW Drive can take 100,000GB of written data before it fails (note that we usually write about 20-30GB/day).

This value may also be written another way: DWPD means Drive Writes Per Day. For example, if a 500GB SSD with a 5 year warranty is rated at 0.5 DWPD, it means we can write 250GB of data per day and still expect it to last 5 years.

Write Endurance is something that many people worry about too much. I have an old OCZ Drive which only allows 0.04 DWPD (20GB/day) for 3 years - which is very little. After using it for work and video editing, it turned out that I had written only 6000GB over about 4 years of use, and its lifetime is still at 97%.

- Random Read/Write IOPS - This one is for comparison. It tells us how many random operations the drive can process per second (I/O per second). These days it is in the 100,000 IOPS (100K IOPS) range, and most NVMe drives have more IOPS than SATA ones.
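
The endurance numbers in the Write Endurance item above can be checked with a small sketch. It uses the figures from the text (100TBW, 20-30GB/day, 0.5 DWPD on a 500GB drive with a 5 year warranty) and takes 25GB/day as the middle of the quoted range. The function names are made up for this example.

```python
def days_until_tbw(tbw_terabytes, gb_written_per_day):
    """How many days of writing it takes to reach the TBW rating (1TB = 1000GB)."""
    return tbw_terabytes * 1000 / gb_written_per_day


def dwpd_to_gb_per_day(dwpd, capacity_gb):
    """Daily write allowance implied by a DWPD rating."""
    return dwpd * capacity_gb


def dwpd_to_tbw(dwpd, capacity_gb, warranty_years):
    """Total terabytes written if the daily allowance is used every day."""
    return dwpd * capacity_gb * 365 * warranty_years / 1000


days = days_until_tbw(100, 25)
print(days, round(days / 365, 1))   # 4000.0 days, about 11 years
print(dwpd_to_gb_per_day(0.5, 500)) # 250.0 GB per day
print(dwpd_to_tbw(0.5, 500, 5))     # 456.25 TB over the warranty period
```

At typical usage, a 100TBW drive takes about 11 years to reach its rating, which is why this number is rarely the one to worry about.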

So what does LEVEL51 recommend?

- Invest in an NVMe SSD for the primary drive that holds Windows and your work files (often stored in My Documents on the same drive as Windows). An SSD is more reliable, and the machine will feel much faster to work on.

- If you are mainly playing games, there's no need for an expensive NVMe SSD. You might be tempted to use an HDD for games and invest in a small SSD for Windows. Trust us: just get one SSD, leave everything on that one drive, and save yourself the headache of managing two.

- For professional video editing, it's best to go for an SSD with a lot of TBW, because we already know that we will write and delete data often.

That's it - now you know why we should all use SSD - please like and share this post if you found it useful!